Rook Add-On

The Rook add-on creates and manages a Ceph cluster along with a storage class for provisioning PVCs. It also runs the Ceph RGW object store to provide an S3-compatible store in the cluster.

The EKCO add-on is recommended when installing Rook. EKCO is responsible for performing various operations to maintain the health of a Ceph cluster.
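
As a minimal sketch of the recommendation above, a kURL spec could list both add-ons side by side. The `ekco` key and its `version: latest` value are assumptions based on the usual add-on layout, not something this page defines; see the EKCO add-on documentation for its actual options.

```yaml
spec:
  rook:
    version: latest
  # Assumed add-on key: EKCO maintains the health of the Ceph cluster.
  ekco:
    version: latest
```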

Host Package Requirements

The following host packages are required for Red Hat Enterprise Linux 9 and Rocky Linux 9:

- lvm2

Advanced Install Options

```yaml
spec:
  rook:
    version: latest
    blockDeviceFilter: sd[b-z]
    cephReplicaCount: 3
    isBlockStorageEnabled: true
    storageClassName: "storage"
    hostpathRequiresPrivileged: false
    bypassUpgradeWarning: false
    minimumNodeCount: 3
```

| Flag | Usage |
|---|---|
| version | The version of Rook to be installed. |
| storageClassName | The name of the StorageClass that will use Rook to provision PVCs. |
| cephReplicaCount | Replication factor of Ceph pools. The default is to use the number of nodes in the cluster, up to a maximum of 3. |
| minimumNodeCount | The number of nodes required in a CephCluster to trigger a distributed storage migration. Must be set to a value of 3 or greater. |
| isBlockStorageEnabled | Use block devices instead of the filesystem for storage in the Ceph cluster. This flag is automatically set to true for version 1.4.3+ because block storage must be enabled for these versions. |
| isSharedFilesystemDisabled | Disable the rook-ceph shared filesystem, reducing CPU and memory load by no longer needing to schedule several pods. Applies to version 1.4.3+. |
| blockDeviceFilter | Only use block devices matching this regex. |
| hostpathRequiresPrivileged | Runs Ceph Pods as privileged to be able to write to hostPaths in OpenShift with SELinux restrictions. |
| bypassUpgradeWarning | Bypass upgrade warning prompt. |
| nodes | Override default settings to use all nodes and devices with individual settings per node. Must be a CephCluster CRD nodes array as described in the Node Settings section of the CephCluster CRD Rook documentation. |
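
To illustrate how a flag from the table fits into the spec, the following sketch disables the shared filesystem. It assumes a Rook version of 1.4.3 or later, because the flag applies only to those versions; `latest` is used here purely for illustration.

```yaml
spec:
  rook:
    version: latest
    # Skips the rook-ceph shared filesystem pods to reduce CPU and memory load.
    isSharedFilesystemDisabled: true
```

With this setting, the ReadWriteMany storage described under Shared Filesystem would not be available.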

System Requirements

The following ports must be open between nodes for multi-node clusters:

| Protocol | Direction | Port Range | Purpose | Used By |
|---|---|---|---|---|
| TCP | Inbound | 9090 | CSI RBD Plugin Metrics | All |

The /var/lib/rook/ directory requires at least 10 GB of available space for Ceph monitor metadata.

Block Storage

Rook versions 1.4.3 and later require a dedicated block device attached to each node in the cluster. The block device must be unformatted and dedicated for use by Rook only. The device cannot be used for other purposes, such as being part of a RAID configuration. If the device is used for purposes other than Rook, then the installer fails, indicating that it cannot find an available block device for Rook.

For Rook versions earlier than 1.4.3, a dedicated block device is recommended in production clusters.

For disk requirements, see Add-on Directory Disk Space Requirements.

You can enable and disable block storage for Rook versions earlier than 1.4.3 with the isBlockStorageEnabled field in the kURL spec.

When the isBlockStorageEnabled field is set to true, or when using Rook versions 1.4.3 and later, Rook starts an OSD for each discovered disk.

This can result in multiple OSDs running on a single node.

Rook ignores block devices that already have a filesystem on them.

The following provides an example of a kURL spec with block storage enabled for Rook:

```yaml
spec:
  rook:
    version: latest
    isBlockStorageEnabled: true
    blockDeviceFilter: sd[b-z]
```

In the example above, the isBlockStorageEnabled field is set to true.

Additionally, blockDeviceFilter instructs Rook to use only block devices that match the specified regex.

For more information about the available options, see Advanced Install Options above.

The Rook add-on waits for the dedicated disk that you attached to your node before continuing with installation. If you attached a disk to your node, but the installer is waiting at the Rook add-on installation step, see "OSD pods are not created on my devices" in the Rook documentation for troubleshooting information.

Filesystem Storage

By default, for Rook versions earlier than 1.4.3, the cluster uses the filesystem for Rook storage. However, block storage is recommended for Rook in production clusters. For more information, see Block Storage above.

When using the filesystem for storage, each node in the cluster has a single OSD backed by a directory in /opt/replicated/rook/.

We recommend a separate disk or partition at /opt/replicated/rook/ to prevent a disruption in Ceph's operation as a result of the root partition running out of space.

Note: All disks used for storage in the cluster should be of similar size. A cluster with large discrepancies in disk size may fail to replicate data to all available nodes.

Shared Filesystem

The Ceph filesystem is supported with version 1.4.3+.

This allows the use of PersistentVolumeClaims with access mode ReadWriteMany.

Set the storage class to rook-cephfs in the PVC spec to use this feature.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cephfs-pvc
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: rook-cephfs
```
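
A workload could then share that claim across several pods. The following is a minimal sketch rather than a recommended deployment: the `cephfs-demo` name, the `busybox:1.36` image, and the `/data` mount path are hypothetical, and only the `cephfs-pvc` claim name comes from the example above.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cephfs-demo          # hypothetical name
spec:
  replicas: 2                # two pods mount the same volume
  selector:
    matchLabels:
      app: cephfs-demo
  template:
    metadata:
      labels:
        app: cephfs-demo
    spec:
      containers:
      - name: writer
        image: busybox:1.36  # hypothetical image
        command: ["sh", "-c"]
        args:
        # Each pod appends timestamps to its own file on the shared volume.
        - 'while true; do date >> "/data/$(hostname).log"; sleep 30; done'
        volumeMounts:
        - name: shared
          mountPath: /data
      volumes:
      - name: shared
        persistentVolumeClaim:
          claimName: cephfs-pvc
```

Because the claim uses ReadWriteMany, both replicas can mount it for writing at the same time, even when they are scheduled on different nodes.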

Per-Node Storage Configuration

By default, Rook is configured to consume all nodes and devices found on those nodes for Ceph storage.

This can be overridden with configuration per-node using the rook.nodes property of the spec.

The value of rook.nodes is a string that must adhere to the nodes storage configuration spec in the CephCluster CRD.

See the Rook CephCluster CRD Node Settings documentation for more information.

For example:

```yaml
spec:
  rook:
    nodes: |
      - name: node-01
        devices:
        - name: sdb
      - name: node-02
        devices:
        - name: sdb
        - name: sdc
      - name: node-03
        devices:
        - name: sdb
        - name: sdc
```

To override this property at install time, see Modifying an Install Using a YAML Patch File for more details on using patch files.
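
A patch file carrying the per-node configuration might look like the following sketch. The `apiVersion`, `kind`, and `metadata.name` values follow the general shape of a kURL Installer manifest and are assumptions here, as are the node and device names; check the patch file documentation referenced above for the exact format and for how to pass the file to the installer.

```yaml
# Assumed Installer manifest shape; verify against the patch file documentation.
apiVersion: cluster.kurl.sh/v1beta1
kind: Installer
metadata:
  name: rook-nodes-patch   # hypothetical name
spec:
  rook:
    nodes: |
      - name: node-01      # hypothetical node and device names
        devices:
        - name: sdb
```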

Upgrades

It is now possible to upgrade multiple minor versions of the Rook add-on at once. This upgrade process will step through minor versions one at a time. For example, upgrades from Rook 1.0.x to 1.5.x will step through Rook versions 1.1.9, 1.2.7, 1.3.11 and 1.4.9 before installing 1.5.x. Upgrades without internet access may prompt the end-user to download supplemental packages.

Rook upgrades from 1.0.x migrate data off of any filesystem-based OSDs in favor of block device-based OSDs. The upstream Rook project introduced a requirement for block storage in versions 1.3.x and later.

Monitoring

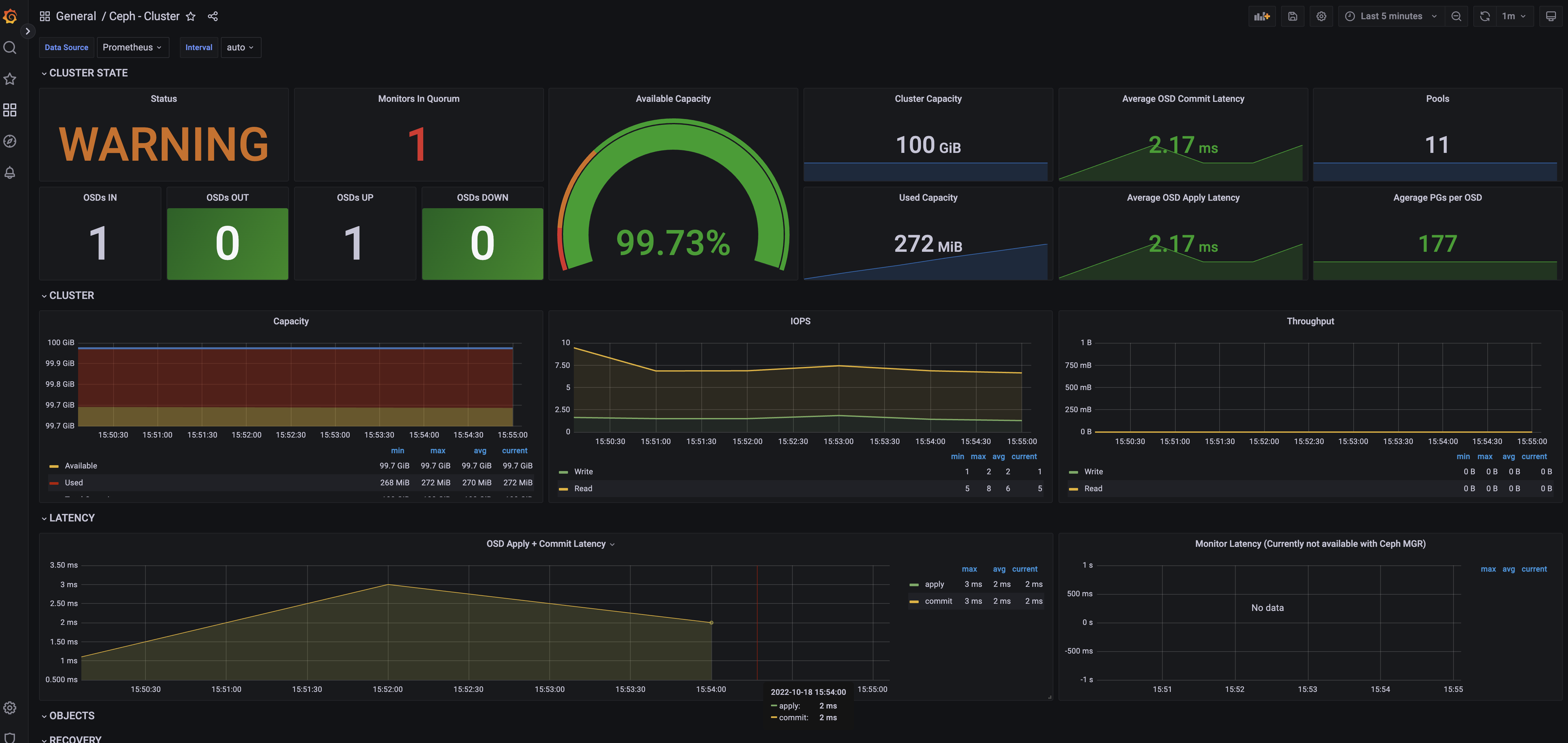

For Rook version 1.9.12 and later, when you install with both the Rook add-on and the Prometheus add-on, kURL enables Ceph metrics collection and creates a Ceph cluster statistics Grafana dashboard.

The Ceph cluster statistics dashboard in Grafana displays metrics that help you monitor the health of the Rook Ceph cluster, including the status of the Ceph object storage daemons (OSDs), the available cluster capacity, the OSD commit and apply latency, and more.

The following shows an example of the Ceph cluster dashboard in Grafana:

To access the Ceph cluster dashboard, log in to Grafana in the monitoring namespace of the kURL cluster using your Grafana admin credentials.

For more information about installing with the Prometheus add-on and updating the Grafana credentials, see Prometheus Add-on.
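
A spec that meets the conditions for Ceph metrics collection might look like the following sketch. The `prometheus` key and its `version: latest` value are assumptions based on the usual add-on layout; the Rook version shown is the minimum named in this section.

```yaml
spec:
  rook:
    version: 1.9.12      # minimum Rook version for the Ceph dashboard
  # Assumed add-on key; see Prometheus Add-on for its actual options.
  prometheus:
    version: latest
```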